In 2005 Ted Patrick posted a great article on the frame execution model inside the Flash Player that he dubbed the ‘elastic racetrack’. It has served as a great reference for me over the years, helping me understand how code execution and rendering are balanced within the processing of a frame. Since the introduction of Flash Player 9 and the new AVM2, I’ve noticed a few changes to the elastic racetrack model and thought I’d share them. This information is based on research into Flash Player internals as well as observations I’ve made playing around with the event and rendering model, but the full model hasn’t been confirmed by Adobe engineers.

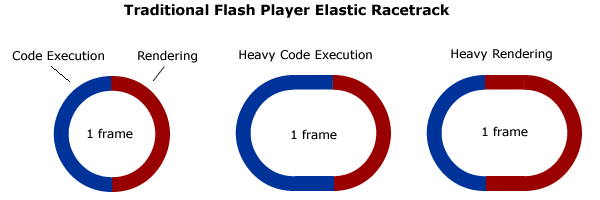

The basic premise of the original elastic racetrack is still the same. Given a specific frame rate to operate on, the Flash Player will devote the first segment of the frame to executing code, and the second segment to rendering display objects. Either segment can grow its part of the racetrack to accommodate more processing and effectively extend the duration of the frame.

What changes from the previous model is how those segments look under a microscope and how they come together to form a ‘frame’.

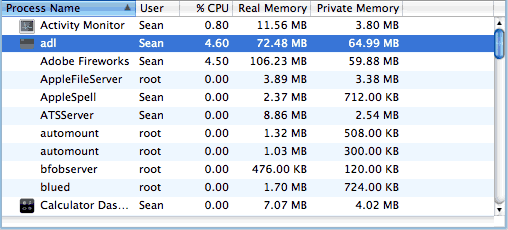

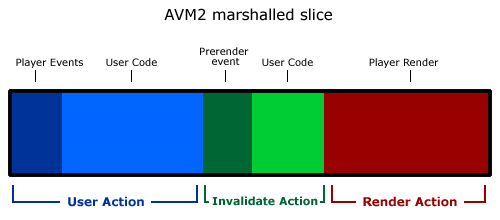

AVM2 is controlled by what I’m going to call the Marshal. The Marshal is responsible for carving out time slices for the Flash Player to operate on. It’s important to clarify up front that these time slices are not the same thing as the framerate compiled into a swf, but we’ll see below how the player ultimately synthesizes a framerate from these slices. Running a Flex-compiled swf within Firefox under Mac OS X, the Marshal appears to be carving out 19-20 millisecond slices, but this can differ between platforms and browsers, based on what I’ve observed as well as what Adobe employees have hinted at. It can also change depending on how the swf was compiled; see some of the comments below. For the sake of the article, let’s assume we’re only talking about a 20 millisecond slice to make the math easy. This means the Marshal will attempt to generate and execute no more than 50 slices each second, and it may be fewer depending on the elasticity of code execution or rendering. Inside each slice, 5 possible steps are processed in the following order.

1. Player events are dispatched – This includes events dispatched by the Timer, Mouse, ENTER_FRAMEs, URLLoader, etc.

2. User code is executed – Any code listening to events dispatched by step 1 is executed at this stage.

3. RENDER event is dispatched – This special event is dispatched when the user calls stage.invalidate() during normal user code operation.

4. Final user code is executed – User code listening specifically for step 3 is executed at this point.

5. Player renders changes to the display list.
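To make the ordering above concrete, here is a minimal Python sketch of a single slice. It is a toy model and not how the player is implemented: `run_slice`, `pending_events`, `handlers`, `render_listeners`, and `render_due` are all hypothetical names, and the `render_due` flag is my assumption for modeling the fact that the five steps are only "possible" in any given slice.

```python
from typing import Callable, Dict, List

Invalidate = Callable[[], None]


def run_slice(
    pending_events: List[str],
    handlers: Dict[str, List[Callable[[Invalidate], None]]],
    render_listeners: List[Callable[[], None]],
    render_due: bool = True,
) -> List[str]:
    """Toy model of one slice; returns a log of the steps that ran, in order."""
    log: List[str] = []
    invalidated = False

    def invalidate() -> None:
        # Stand-in for stage.invalidate(): requests a RENDER event.
        nonlocal invalidated
        invalidated = True

    # Step 1: player events are dispatched.
    for event in pending_events:
        log.append(f"dispatch {event}")

    # Step 2: user code listening to those events is executed.
    for event in pending_events:
        for handler in handlers.get(event, []):
            log.append(f"user code for {event}")
            handler(invalidate)

    # Steps 3 and 4: RENDER is dispatched only if user code asked for it,
    # then the code listening for RENDER runs.
    if invalidated:
        log.append("dispatch RENDER")
        for listener in render_listeners:
            log.append("final user code")
            listener()

    # Step 5: the player renders changes to the display list.
    # Assumption: whether this slice renders is decided outside the slice.
    if render_due:
        log.append("render display list")

    return log
```

Calling `run_slice(["TIMER"], {"TIMER": [lambda invalidate: invalidate()]}, [lambda: None])` walks through all five steps, while a slice with no listeners and `render_due=False` only dispatches its events.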

The Marshal executes this 20 millisecond slice over and over and decides on the fly which actions to run. The exact actions processed within a slice will ultimately derive the 2 main racetrack segments (code execution and rendering) that constitute a ‘frame’. User actions and Invalidation actions fill up the code segment track, while Render actions fill up the render segment track. It’s important to note that actions will only occur at times predetermined by the Marshal, so if you have a short-running User action, the Marshal will still wait a few milliseconds before moving on to the Invalidation and Render actions.

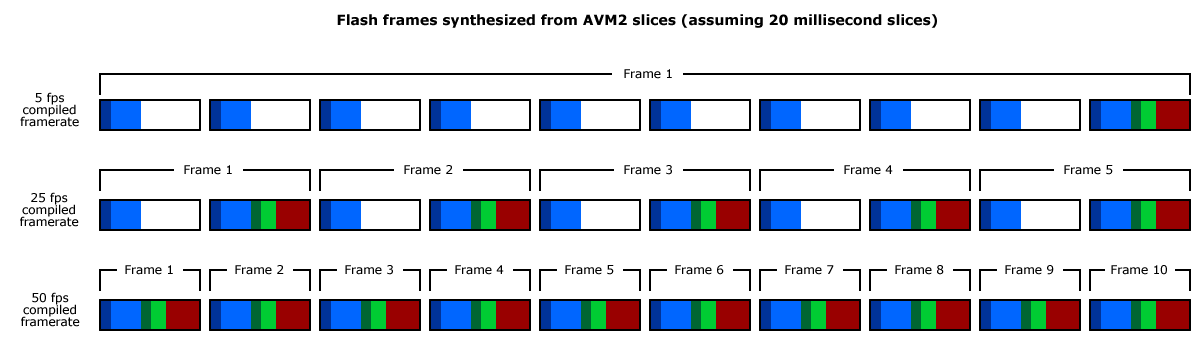

The best way to illustrate which actions are run and how the elastic racetrack is created is to look at how those slices are processed on a swf running at 5 fps, 25 fps, and 50 fps.

As you can see, the elastic racetrack performs different actions per frame and requires a different visual illustration depending on the framerate that the player is trying to synthesize. For a swf running at 5 fps, each frame processes 10 User actions, 1 Invalidation action, and 1 Render action. At 25 fps, each frame processes 2 User actions, 1 Invalidation action, and 1 Render action. At 50 fps, each frame processes 1 User action, 1 Invalidation action, and 1 Render action. What’s important to note in the above chart is that some events are only available in certain slices. For instance, the Event.ENTER_FRAME event will only ever be dispatched in a slice that occurs at the start of a frame.
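The per-frame counts above fall straight out of the slice arithmetic. As a rough check, here is a small Python sketch that assumes the 20 millisecond slice used throughout this article; `actions_per_frame` is a hypothetical helper, and it deliberately handles only framerates that divide evenly into the slices available per second.

```python
def actions_per_frame(compiled_fps: int, slice_ms: int = 20) -> dict:
    """Count the actions in one frame, assuming a fixed slice length."""
    slices_per_second = 1000 // slice_ms  # 50 slices for a 20 ms slice

    if compiled_fps <= 0 or slices_per_second % compiled_fps != 0:
        # Uneven framerates split their slices irregularly, so this
        # simple helper refuses them rather than guess.
        raise ValueError("framerate must divide the slices per second evenly")

    slices_per_frame = slices_per_second // compiled_fps

    # Every slice in the frame can run User actions, but only one
    # Invalidation action and one Render action occur per frame.
    return {"user": slices_per_frame, "invalidation": 1, "render": 1}


for fps in (5, 25, 50):
    print(fps, actions_per_frame(fps))
```

Changing `slice_ms` shows how the counts shift on a platform where the Marshal uses a different slice length.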

So what does this all mean? There are a few quick ideas to take away from this.

- Long-running code execution or render segments can extend a given slice beyond 20 milliseconds. Elasticity will be applied to that particular slice and the duration of the frame may or may not be extended as a result. The Marshal may reduce the number of slices that constitute a frame in order to keep the active framerate close to the compiled framerate.

- A swf’s real framerate won’t exceed the Marshal’s rate defined for the player instance. You can set your compiled framerate at 120 fps, but Flash will still only process a maximum of 50 slices per second, which generate 50 render calls (more or less depending on the system config).

- Code can be executed more often than the compiled framerate. A swf compiled at 1 fps can execute a Timer or Mouse event in every slice, even though it will only render in the last slice. Additionally, if you choose, you can render to the screen sooner than the compiled framerate by calling updateAfterEvent(), but only within a Mouse, Timer, or Keyboard event handler. In this instance, though, the Marshal will consider that the end of the frame and start a new frame on the next slice. Lastly, Flash will force an automatic render when mousing over any Sprite that has had its visual properties (x, y, width, height, etc.) changed. Naturally, this still occurs at the end of the slice and any pre-render logic will still run.

- Compiling a framerate that isn’t a multiple of the total number of slices per second for your platform will cause irregular rendering as it tries to divide up the slices. If you were to compile in a framerate of 20 on a platform executing 50 slices per second, then the player has to render 2 frames every 5 slices and would follow a 3-2-3-2-3-2 slice-to-render rate.
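The framerate cap and the 3-2-3-2 pattern from the list above can be reproduced with a few lines of arithmetic. This Python sketch is only an illustration under assumptions: `slices_per_frame_pattern` is a hypothetical name, the 50 slices per second comes from the 20 millisecond slice used in this article, and rounding each frame boundary up to the next whole slice is my choice because it matches the pattern described, not something confirmed about the player.

```python
from typing import List


def slices_per_frame_pattern(
    compiled_fps: int, slices_per_second: int = 50, frames: int = 6
) -> List[int]:
    """Return how many slices each of the first `frames` frames spans."""
    # The real framerate cannot exceed the Marshal's slice rate.
    effective_fps = min(compiled_fps, slices_per_second)

    pattern = []
    previous_boundary = 0
    for frame in range(1, frames + 1):
        # Slice index where this frame ends: ceil(frame * slices / fps),
        # computed with integer math to avoid float rounding.
        boundary = -((-frame * slices_per_second) // effective_fps)
        pattern.append(boundary - previous_boundary)
        previous_boundary = boundary
    return pattern


print(slices_per_frame_pattern(20))   # uneven split
print(slices_per_frame_pattern(25))   # even split
print(slices_per_frame_pattern(120))  # capped at one slice per frame
```

A framerate that divides evenly, such as 25 fps, gives every frame the same number of slices, while 20 fps alternates between 3 and 2, which is the irregular rendering described above.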

These 4 facts are moving targets though, since for this article we’re working with a 20 millisecond slice that’s processed 50 times per second. In reality you’ll see time slices as low as 5 milliseconds or as high as 100 milliseconds, and some of the math will change as a result.

If you’d like to test this model for yourself, the easiest route is to create a swf running at 1 fps and another at 100 fps, both with a Timer object set on a 1 millisecond interval. Inside the Timer event handler, change the x property of a display object, hook a bunch of getTimer() traces up to different player events like Mouse, EnterFrame, and Render, and watch the carnage unfold in your console. The rest of the information, which you can’t derive from the results, comes from a lot of context about the player I’ve learned over the past 2 years and so isn’t as easily visible. If anyone has any information to help add to or correct the above model, please submit it in the comments.

Thanks to several readers who have clarified some of the differences between Flex and Flash as well as how the Flash API is able to change the default behaviors described above.